http://www.chicagotribune.com/bluesky/originals/chi-moshe-tamssot-holograms-bsi-20141205-story.html

A Chicago entrepreneur and the fate of a private hologram collection

Saturday, December 6, 2014

Robotic Micro-Scallops Can Swim Through Your Eyeballs

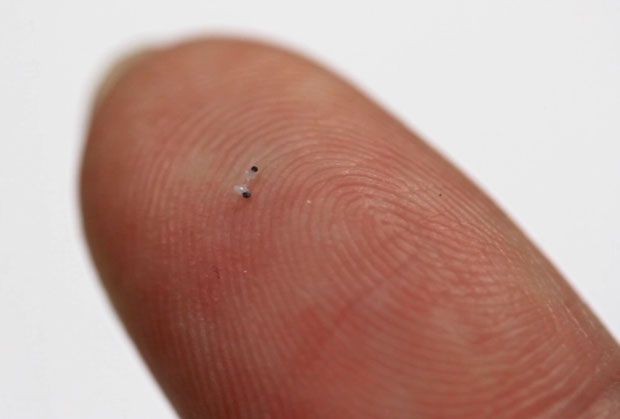

Image: Alejandro Posada/MPI-IS

An engineered scallop that is only a fraction of a millimeter in size and that is capable of swimming in biomedically relevant fluids has been developed by researchers at the Max Planck Institute for Intelligent Systems in Stuttgart.

Designing robots on the micro or nano scale (like, small enough to fit inside your body) is all about simplicity. There just isn’t room for complex motors or actuation systems. There’s barely room for any electronics whatsoever, not to mention batteries, which is why robots that can swim inside your bloodstream or zip around your eyeballs are often driven by magnetic fields. However, magnetic fields drag around anything and everything that happens to be magnetic, so in general, they’re best for controlling just one microrobot at a time. Ideally, you’d want robots that can swim all by themselves, and a robotic micro-scallop, announced today in Nature Communications, could be the answer.

When we think about how robotic microswimmers move, the place to start is understanding how fluids (specifically, biological fluids) behave at very small scales. Blood doesn’t behave like water: it is what’s called a non-Newtonian fluid. All this means is that blood’s viscosity changes (it becomes thicker or thinner) depending on how much force you’re exerting on it; blood, for example, gets thinner the faster it is sheared. The classic example of a non-Newtonian fluid is oobleck, which you can make yourself by mixing one part water with two parts corn starch. Oobleck acts like a liquid until you exert a bunch of force on it (say, by rapidly pushing your hand into it), at which point its viscosity increases until it’s nearly solid.
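One common way to describe this behaviour is the power-law (Ostwald–de Waele) model, in which the apparent viscosity depends on the shear rate as μ = K·γ̇^(n−1): n < 1 gives a shear-thinning fluid, n = 1 a Newtonian one, and n > 1 a shear-thickening one like oobleck. The sketch below is illustrative only; K = 1 and the n values are arbitrary choices, not measured data for blood or oobleck, and real blood is usually described with more elaborate models.

```python
def apparent_viscosity(shear_rate, K, n):
    """Power-law (Ostwald-de Waele) apparent viscosity: mu = K * shear_rate**(n - 1).

    shear_rate in 1/s, K is the consistency index (Pa*s**n), n the flow index.
    n < 1: shear-thinning, n == 1: Newtonian (mu == K), n > 1: shear-thickening.
    """
    if shear_rate <= 0:
        raise ValueError("shear_rate must be positive")
    return K * shear_rate ** (n - 1)


# Illustrative parameters only (K = 1, arbitrary n), not measured fluid data.
for label, n in [("shear-thinning", 0.5), ("Newtonian", 1.0), ("shear-thickening", 1.5)]:
    slow = apparent_viscosity(1.0, K=1.0, n=n)
    fast = apparent_viscosity(100.0, K=1.0, n=n)
    print(f"{label:<17} slow shear: {slow:.2f}  fast shear: {fast:.2f}")
```

Going from a shear rate of 1 to 100 in this toy setting, the shear-thinning fluid’s viscosity drops tenfold, the Newtonian one stays put, and the shear-thickening one rises tenfold.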

These non-Newtonian fluids make up most of the liquid stuff that you have going on in your body (blood, joint fluid, eyeball goo, etc.), which, while it sounds like it would be more complicated to swim through, is actually an opportunity for robots. Here’s why:

At very small scales, robotic actuators tend to be simple and reciprocal. That is, they move back and forth, as opposed to around and around like a traditional motor. In water (or another Newtonian fluid), it’s hard to make a simple swimming robot out of reciprocal motions: at these scales, the back-and-forth motion pushes equally in both directions, so the robot just moves forward a little and then backward by the same amount, over and over. Biological microorganisms generally do not use reciprocal motions to get around in fluids for this exact reason, relying instead on nonreciprocal motions of flagella and cilia.

However, if we’re dealing with a non-Newtonian fluid, this rule (formally, Purcell’s scallop theorem, which applies to Newtonian fluids at low Reynolds number) no longer holds, meaning that it should be possible to use reciprocal movements to get around. A team of researchers led by Prof. Peer Fischer at the Max Planck Institute for Intelligent Systems, in Germany, has figured out how, and, appropriately enough, it’s a microscopic robot based on the scallop.
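The bookkeeping behind the scallop theorem can be illustrated with a deliberately crude toy model. This is an assumption for illustration, not the model used by Fischer’s group: each half-stroke moves the swimmer by the hinge amplitude times a coefficient. In a Newtonian fluid at low Reynolds number, that coefficient does not depend on how fast the hinge moves, so opening and closing cancel exactly however different their speeds are. If the coefficient is allowed to depend on the stroke rate (a hypothetical stand-in for rate-dependent viscosity), a slow-open/fast-close cycle no longer cancels. The function names and the power-law form of `rate_dependent` are made up for this sketch, and the signs carry no physical meaning.

```python
def net_displacement(amplitude, open_rate, close_rate, coeff):
    """Net displacement over one open/close cycle of a reciprocal swimmer (toy model).

    coeff(rate) is a hypothetical 'displacement per radian of hinge motion' at a
    given hinge angular rate; opening and closing push in opposite directions.
    """
    forward = coeff(open_rate) * amplitude
    backward = coeff(close_rate) * amplitude
    return forward - backward


def newtonian(rate):
    # Rate-independent: only the angle swept matters (the scallop theorem's premise).
    return 0.1


def rate_dependent(n, base=0.1, ref_rate=1.0):
    # Hypothetical power-law dependence on stroke rate; n = 1 recovers the Newtonian case.
    return lambda rate: base * (rate / ref_rate) ** (n - 1)


# Slow opening (rate 1), fast closing (rate 10), same 1-radian amplitude.
print(net_displacement(1.0, 1.0, 10.0, newtonian))            # 0.0: no net motion
print(net_displacement(1.0, 1.0, 10.0, rate_dependent(0.5)))  # nonzero
print(net_displacement(1.0, 1.0, 10.0, rate_dependent(1.5)))  # nonzero, opposite sign
```

The only thing the toy shows is the logic of the argument: a reciprocal stroke can produce net motion only if something about the fluid’s response depends on how fast the stroke is performed.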

As we discussed above, these robots are true swimmers. This particular version is powered by an external magnetic field, but the field only provides energy input; it doesn’t drag the robot around directly, as it does with other microbots. And there are plenty of kinds of micro-scale reciprocal actuators that could be used, like piezoelectrics, bimetal strips, shape memory alloys, or heat- or light-actuated polymers. There are lots of design optimizations that can be made as well, like making the micro-scallop more streamlined or “optimizing its surface morphology,” whatever that means.

The researchers say that the micro-scallop is more of a “general scheme” for micro-robots rather than a specific micro-robot that’s intended to do anything in particular. It’ll be interesting to see how this design evolves, hopefully to something that you can inject into yourself to fix everything that could ever be wrong with you. Ever.

Friday, December 5, 2014

Cicret Bracelet

At the beginning, there was a simple sketch…

…then a first moulding.

Now it’s a dream we want to share with you.

With the Cicret Bracelet, you can make your skin your new touchscreen.

Read your email, play your favorite games, answer your calls, check the weather, find your way… Do whatever you want on your arm.

We have 2,364 donors as of today! Join them.

We need 300,000 euros to develop the CICRET APP on all the platforms.

We need 700,000 euros to finish the first prototype of the CICRET BRACELET.

So feel free to donate an amount of your choice.

If everyone gives us 1 euro, we will make it and release our products!

To donate via our PayPal account, fill in the form below.

VISUAL REVOLUTION OF THE VANISHING OF ETHAN CARTER

25 MARCH 2014 | AUTHOR: ANDRZEJ POZNANSKI | CATEGORY: ETHAN CARTER, GAME DESIGN

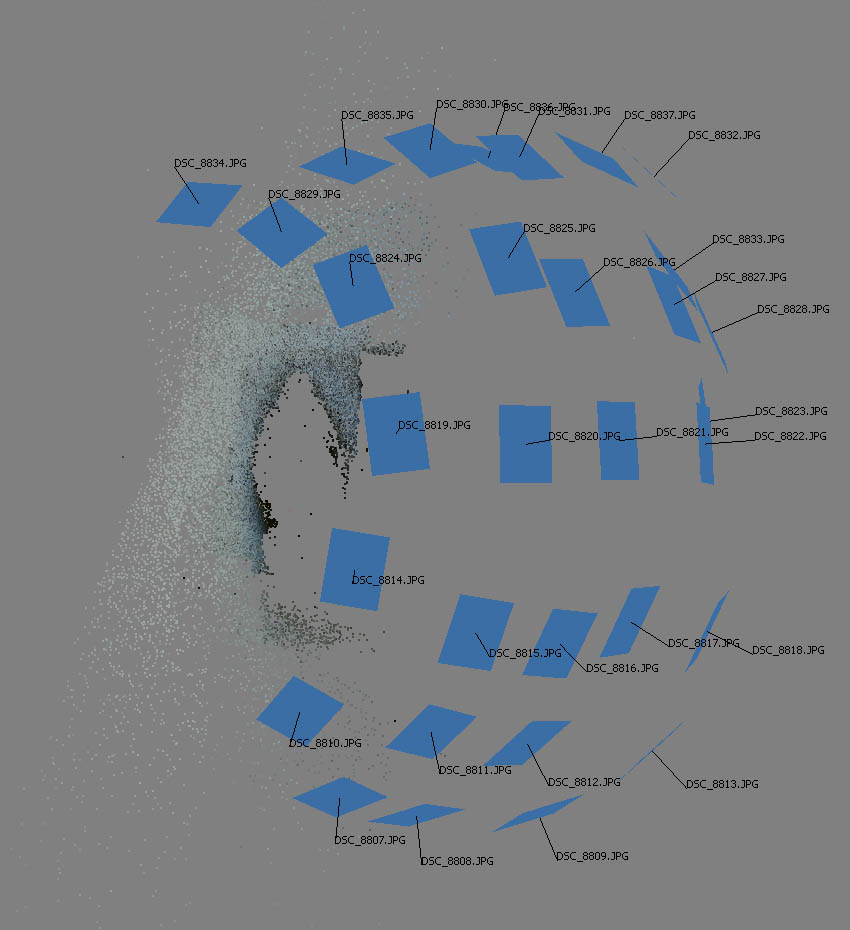

This… is not a photograph. It is an actual 3D model of a church from our game. Before I tell you all about how we created such photorealistic assets (and, maybe surprisingly, why we are not using them in a fully photorealistic way in our game), let us think about what makes something look real.

Look around you – do you see any tiling textures? You shouldn’t, but if you do – look closer. That brick wall or those floor tiles are not, contrary to popular belief, a textbook definition of tiling. Look how some edges are more worn out than others, how some parts seem smoother than others, how dirt and dust settled in certain areas. Some parts may be chipped off, some areas stained, on some parts mold or rust started to settle… OK, maybe those last ones are not in your fancy neighborhood, but you get the point.

And it’s not random, either. If you really wanted to, you could probably make sense of it all. The floor might be more worn around the front door, or where your chair wheels constantly scrub a patch of the floor, and the outer wall might be darker on the side that gets hit by the rain more often.

You could make sense of it all, but who cares? Your brain usually doesn’t – it’s real, it’s normal, nothing to get excited about. However, your brain does take notice when things are not normal. Like in video games. Even if only on an unconscious level, your brain points out all those perfectly tiling textures, all those evenly worn-out surfaces, those stains placed in all the wrong places – and whispers in your ear: LOL!

I’m really proud of Bulletstorm (I directed all of the environment artists’ efforts), but, well, sometimes the art was just as crazy as the game itself. Take a look at this floor surface: does it seem right to you?

It’s not that technical limitations prevent developers from creating things that feel right, not on modern PCs and consoles anyway.
The problem – if we put time and money constraints aside – is that, more often than not, graphics artists’ brains don’t bother with conscious analysis of reality either. We can be as quick as you to point out an unrealistic-looking asset in other games, but we rarely stop to think about our own work. Need to make a brick wall texture? Basically a bunch of bricks stuck together? Old-looking? Sure thing, boss! If we’re diligent, we may even glance at a photo or two before we get cracking. But even then, quite often we look but do not see.

In The Vanishing of Ethan Carter, you’ll see some of the most realistic environment pieces ever created for a video game. Assets are no longer simplistic approximations of reality – they are reality. But it’s not that we have somehow magically rewired our brains to be ideal reality replicators…

Enter photogrammetry.

With photogrammetry, we no longer create worlds while isolated from the world, surrounded by walls and screens. We get up, go out there and shoot photos, lots of photos. And then some. Afterwards, specialized software (we are using PhotoScan from Agisoft) looks at these photos and stares at them until it can finally match every discernible detail in one photo to the same feature in other photos taken from different angles. This results in a cloud of points in 3D space representing the real-world object.
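Turning matched features into that cloud of points is a triangulation problem: once the camera positions are known, a feature matched across photos defines one viewing ray per camera, and the 3D point sits where those rays (nearly) meet. The post doesn’t describe PhotoScan’s internals, so the sketch below swaps in the simple midpoint method, assuming two pinhole cameras with identical, hypothetical intrinsics (`F`, `CX`, `CY`), no rotation, and camera centres that have already been solved for.

```python
# Midpoint triangulation of one 3D point from two views (illustrative sketch).
# Assumptions: pinhole cameras with identical, hypothetical intrinsics, no rotation
# (both look down +z), and camera centres that are already known.
F, CX, CY = 1000.0, 320.0, 240.0  # focal length and principal point, in pixels


def sub(a, b):
    return [x - y for x, y in zip(a, b)]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def scale(a, k):
    return [x * k for x in a]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project(point, centre):
    """Pixel (u, v) at which a camera at `centre` sees a 3D point."""
    x, y, z = sub(point, centre)
    return (F * x / z + CX, F * y / z + CY)


def ray_direction(pixel):
    """Back-project a pixel into the direction of its viewing ray."""
    u, v = pixel
    return [(u - CX) / F, (v - CY) / F, 1.0]


def triangulate(c1, px1, c2, px2):
    """Midpoint of the shortest segment between the two viewing rays."""
    d1, d2 = ray_direction(px1), ray_direction(px2)
    w0 = sub(c1, c2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b  # zero only when the rays are parallel
    s = (b * e - c * d) / denom  # parameter along ray 1
    t = (a * e - b * d) / denom  # parameter along ray 2
    p1 = add(c1, scale(d1, s))
    p2 = add(c2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


cam_a, cam_b = [0.0, 0.0, 0.0], [1.0, 0.0, 0.0]
target = [0.5, 0.2, 5.0]
estimate = triangulate(cam_a, project(target, cam_a), cam_b, project(target, cam_b))
print(estimate)  # approximately [0.5, 0.2, 5.0]
```

With perfect, noise-free pixels the two rays intersect exactly; with real feature matches they miss each other slightly, which is why the midpoint of their closest approach is used. Production tools instead refine all points and cameras jointly over many photos.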
From there, the software connects the dots to create a 3D model and projects pixels from the photographs onto it to create a texture.

I’ll spare you the details of Lowe’s Scale-Invariant Feature Transform (SIFT) algorithm, solving for the cameras’ intrinsic and extrinsic orientation parameters, and the rest of what that software does. All that matters is that you feed it good photos taken around some object and you get an exact replica of that object, in 3D, in full color, with more detail than you could ever wish for.

Take a look at this slideshow to better understand the process of turning a bunch of photos into an amazing game asset. You can find an extra video in today’s post on our Tumblr.

Photogrammetry is incredible. I have been making games for 20 years, I have worked with amazingly talented artists on huge AAA blockbusters like Bulletstorm and Gears of War, and you could say I am not easily impressed in the art department. But each new photoscan gets me. So much detail, so many intricacies, but most importantly, all of them just make deep sense. Cracks, stains, erosion: Mother Nature has worked for a billion years on some of these assets, and it’s almost unfair to expect comparable quality from artists who spend no more than a few days on similar assets.

Enough convincing; see it for yourself. We have found a new and fairly unique way to let you experience the photogrammetry of The Vanishing of Ethan Carter. What follows is not just static screenshots of our game assets. After you click on an image, a 3D object is loaded, then the texture downloads and voilà, you get an early glimpse into our game world.

You can orbit, pan and zoom the camera around these objects, or just sit back and let the automatic rotation showcase them.
I highly recommend taking charge of the camera (hold down the left mouse button; the rotation direction depends on the position of the mouse pointer in the image), especially after going full screen, to fully experience the detail and quality of these assets.

Speaking of quality: these are raw, unprocessed scans, and some of them are actually lower resolution than what we have in the game.

Twenty-six photos covering the same boulder from every possible angle, and you get this:

Thirty-nine photos taken around a cemetery statue, and you get this:

Photogrammetry gets confused by moving subjects. If you want to scan a human face, even tiny head or facial-muscle movements between photos mean errors in the scan and lots of manual cleanup and resculpting. But you can get around that issue with this:

The fake plastic hand is optional. You set up the gear like this:

You fire all cameras and studio flashes at the exact same millisecond, and you get this:

You don’t always need to cover the entire object in each photo; you can shoot in chunks, as long as the photos overlap enough. This lets you go bigger: 135 photos (over three gigapixels of data!) and you get this sizable rock formation:

With enough skill you can scan entire locations: over two hundred photos (around five gigapixels) and you get this waterfall location, complete with water frozen in time. Before you ask, no, the water in our actual game is not still.

Want to go bigger? How about an entire 100 feet of quarry wall? 242 photos (almost six gigapixels!) and you get this:

How about bigger than that? How about the entire valley? This one took probably around one zillion photos.
Note that for gameplay reasons we actually discarded half of the valley scan and built the rest ourselves, so here you can only see the scanned half, duplicated. Any bigger than that and we’d have to shoot from orbit, so that’s where we drew the line.

At this point you might be tempted to think, “Anyone can snap a few pics, dump them into the software and wait for a finished game level to come out.”

Sadly, things are not that simple. For starters, if you are not really skilled at scanning, you often get something like this:

Or, when you try to scan a person standing next to a wall, you get this:

All these dots are the wall surface, with the scanned person’s shadow on it. The person is nowhere to be seen. Not really what we were after, unless we wanted to scan a ghost.

It is relatively easy to get into photogrammetry, but it is really difficult to master it. Most people know how to snap photos, but very few know this craft really well, and even for those few, a change of mindset is required. You see, much of what photographers learn is the opposite of what photogrammetry requires.

Shallow depth of field (DOF) looks sweet and helps our minds process depth information in a 2D photo, but photogrammetry absolutely hates it. This ties directly to shutter speed and ISO settings: the narrow aperture needed for deep DOF lets in less light, which you have to make up for with a slower shutter or a higher ISO. Settings that photographers widely accept can, at the pixel level, produce enough camera shake, scene or background movement, and ISO noise to mess up your scan.

It helps if you can control the lighting, but again, lighting that works for normal photography might kill your scan instead of improving it. You absolutely need to avoid high-contrast lighting: even if you think your camera can record enough dynamic range to capture detail in both highlights and shadows, photogrammetry software will strongly disagree with you. Also, anything that brings out highlights in your photos (usually a desirable thing) will yield terrible results.

Everything in your photos should be static, including the background and lighting.
Imagine how hard it is to get the wind to stop shaking those tree leaves and grass blades, or to convince the sun to conveniently hide behind the clouds. On our trips to the mountains, we were met with more than a few raised eyebrows for scoffing at perfect sunny weather and summoning the clouds. Well, consider this: you’re doing Van Damme’s epic split (well, almost), trying to balance your feet on some boulder formation, and then the sun comes out. You have to stop taking photos and keep balancing in this position for the next 30 seconds to 5 minutes, waiting for another cloud. Ouch.

I could go on for hours about the challenges of acquiring imagery for photoscanning, but don’t be fooled into thinking that it ends there, with “simply” getting perfect photos.

You often need to mask out and process photos in a number of ways. Sometimes you need to manually help align photos when the software gets confused, and then, fingers crossed, the software will finally spit out an object. As you have seen in our raw samples above, there might still be errors and gaps that you need to fix manually. Then you either accept okay texture quality or begin the long, tricky and tedious work of improving the texture projection and removing excess lighting and shadowing information to achieve a perfect-looking source object.

Job done? Still far from it.

A scanned object will usually weigh between 2 and 20 million triangles. That is an entire game’s polygon budget, in a single asset. You need to be really skilled at geometry optimization and retopology to create a relatively low-poly mesh that carries over most of the original scan’s geometric fidelity. Same thing with the texture: you need amazingly tight texture coordinates (UVs) to maximize the percentage of used space within the texture. But even then you will most likely still have something 4–16 times larger than what you’d like.
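To get a feel for why texture size hurts, here is a rough texture-memory estimate. It assumes the standard block-compression sizes (DXT1/BC1 stores each 4×4 pixel block in 64 bits, i.e. 4 bits per pixel; DXT5/BC3 uses 128 bits, i.e. 8 bits per pixel) and a full mip chain. It does not model The Astronauts’ own compression trick, which the post doesn’t describe, and the 4096×4096 size is an arbitrary example rather than a figure from the game.

```python
# Rough GPU memory estimate for one texture (illustrative sketch).
BITS_PER_PIXEL = {
    "RGBA8 (uncompressed)": 32,
    "DXT1 / BC1": 4,  # 64 bits per 4x4 block
    "DXT5 / BC3": 8,  # 128 bits per 4x4 block
}


def texture_bytes(width, height, bits_per_pixel, mipmaps=True):
    """Approximate bytes for a texture, optionally including a full mip chain.

    Simplification: ignores the padding of mips smaller than 4x4 up to a whole
    block, which real block-compressed formats require.
    """
    total_bits = 0
    w, h = width, height
    while True:
        total_bits += w * h * bits_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)  # next, half-size mip level
    return total_bits // 8


for name, bpp in BITS_PER_PIXEL.items():
    mib = texture_bytes(4096, 4096, bpp) / 2**20
    print(f"{name:<22} {mib:7.1f} MiB")
```

A full mip chain adds roughly a third on top of the base level, and DXT1 is an 8:1 reduction relative to uncompressed 32-bit RGBA, which is the industry-standard baseline the next paragraph compares against.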
At The Astronauts, we have come up with a compression trick that lets us achieve a better compression ratio than industry-standard DirectX DXT compression, with minimal quality degradation. This helps a bit, but we still need to work miracles with texture and level streaming. Thank the gods for heaps of memory on modern GPU hardware.

You are probably tired just from reading about how much work it takes to get the most out of photogrammetry, and will probably scratch your head when I tell you we’re actually not going for full photorealism. Take a look at this shot of a certain old house in our game:

All this work to make true-to-life assets, and then we ‘break’ them with somewhat stylized lighting and postprocessing, and mix scanned assets with traditionally crafted ones? What’s up with that?

We didn’t scan all these assets for you to inspect every tiny pixel and marvel at the technical excellence of our art pipeline. We went through all the hard work on these assets so that you can stop seeing assets and start seeing the world. Feeling the world. It may look photorealistic to some, it may seem magical to others, but if we did our scanning right, it should, above all, feel right.

At the end of the day, photogrammetry is a tool. Nothing less, but nothing more. It’s still up to designers and artists to decide what kind of world they are creating, and on what journey they want to invite the players.

In our case, we’re making a weird fiction game, a dark tale, not a documentary, and this needs to be supported by a certain visual style that goes beyond photorealism (and that is sometimes a bit harder to achieve than pure photorealism).

And on that cue, here is a brand new screenshot from the journey that The Vanishing of Ethan Carter offers, an example of our artistic choice. Additionally, here are high-quality wallpapers: 1920×1080 (16:9) and 2560×1600 (16:10).

Thursday, December 4, 2014

Final Exam: Monday, 15th of Dec, 6:30–9:20

Wednesday, December 3, 2014

Tuesday, December 2, 2014

Google Cardboard Access / iOS accounts

Hey guys,

If anyone wants to use the Google Cardboard, please ask and you can borrow it. We might want to cut one more after learning from our first attempts. I forgot to ask earlier whether anyone wanted to use it.

Last call on the free iOS accounts. If you are interested and have not contacted me, read the old post on this and get me the correct info.

Thx!

Mark
