This is Facebook’s Oculus moment for AR

A few days ago, Facebook held its annual F8 conference. This is typically the time of the year when Facebook announces its most exciting products and vision for the year ahead. And in 2017, it is all about AR.

And boy, is there a lot to unpack.

Zuckerberg began the keynote by revealing how Facebook will help make the camera the first major platform for augmented reality. In doing so, the company aims to realise the vision of many ghosts of AR-past, namely, to become the universal portal to discover and contribute to the augmented world.

But what does this really mean? What exactly has Facebook achieved in this space so far? Where does this leave AR startups now?

Here’s what we know.

Facial Tracking

In September 2015, Snapchat brought AR to the masses with their ‘lenses’ feature. Using the app, Snapchat users could augment their faces, making everyday moments more fun.

Whilst Snapchat may have been first to market, Facebook wants to dominate and it will do this by empowering a creator community to create their own unique filters and effects, through Facebook’s newly announced AR Studio.

However, face tracking is just the beginning.

Positional Tracking

To move from face filters to environment filters, it’s vital to understand the position and orientation of devices used to display that content. Zuckerberg explains that the solution to this problem is an AI technique called ‘simultaneous localisation and mapping’ or SLAM for short.

Several companies have demonstrated relative positional tracking, with varying degrees of success. Indeed, in an effort to dampen the significance of Facebook’s announcements, Snap released a version of positional tracking into their Snapchat app shortly before the F8 conference.

Whether or not the implementations from Facebook or Snap are true SLAM systems is a complicated discussion, probably best suited for another post. However, it is safe to say that both Facebook & Snap seem to have set their sights on augmenting the rear-facing camera in addition to the front-facing camera. The question is, what will help each company win?

Deep Learning

One of the areas in which Facebook has truly innovated is the optimisation of deep convolutional neural networks, a.k.a. ‘CNNs’ or ‘convnets’. These convnets are a subset of artificial intelligence techniques which have shown tremendous improvements over more traditional methods in recent years.

In 2016, Facebook took an existing framework for deep learning called ‘Caffe’, and adapted the framework to run on mobile.

At this year’s conference, Facebook announced the framework’s next iteration, Caffe2, built for mobile from the ground up.

The first application of this newly optimized framework demonstrated realtime style transfer, where the aesthetic style of one photo was applied to the camera feed. This is a technique that apps like Prisma have already demonstrated to great effect (where are they now?).

Object Recognition

In addition to being able to transfer the style of one image onto another, convnets are also effective at identifying objects within a scene, the same way that humans do. This is referred to as semantic recognition and is an idea that AR company Blippar has been experimenting with in recent iterations of their mobile app.

Blippar appears to achieve object recognition with a cloud-based approach, where the video feed is analysed remotely on a server. With Facebook’s optimisation of convnets for mobile, however, it is conceivable that Facebook could eventually perform robust semantic recognition locally on the device, leading to large improvements in speed.

Depth estimation from 2D images

Another area where convnets have shown promise is for estimating 3D depth from 2D images.

This is something humans are pretty good at. For example, if we sit still and close one eye, or look at a photograph, we have a pretty good idea about the scale of objects within a scene. This is because we understand the sizes that those objects are most likely to be.

With enough training data, neural networks can also take advantage of this understanding, in order to infer 3D depth maps from a single image.
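To make the intuition concrete, here is a minimal sketch of the geometry behind "we know how big things usually are". It is not a neural network and not Facebook's method; it simply assumes an idealised pinhole camera, and the function name and all numbers are hypothetical.

```python
def depth_from_known_size(focal_length_px: float,
                          real_height_m: float,
                          pixel_height: float) -> float:
    """Estimate the distance to an object whose real-world height is known.

    Pinhole-camera relation: pixel_height = focal_length_px * real_height_m / depth,
    rearranged to solve for depth (in metres).
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


# Hypothetical numbers: an object we expect to be 0.25 m tall spans 100 pixels
# in an image taken by a camera with a focal length of 800 pixels.
distance_m = depth_from_known_size(800.0, 0.25, 100.0)
print(distance_m)  # 2.0
```

If the same object only spanned 50 pixels, the estimate would double to 4 metres. A trained network can be thought of as learning priors like `real_height_m` for many kinds of object at once, rather than being told them.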

This has several consequences.

Firstly, it allows rich 3D visual effects to be applied to images and video after they have been created, without having to capture additional sensor information from the accelerometer or gyroscope of a device. If the algorithms for depth estimation are too intensive to run at 30fps, perhaps this is a good place for Facebook to start. It also provides the option to run the algorithms remotely on a server if need be.

However, if Facebook can get depth estimation running in real time, it provides several additional capabilities beneficial for AR, like being able to infer occlusion masks, or to increase the stability and robustness of their camera tracking.

SLAM + Object Recognition

Things get really exciting when you combine traditional SLAM techniques with object recognition within a given environment. By doing this, single camera devices will be able to display rich AR experiences that react to objects in the world around them.

For example, in the video above, Facebook demonstrates a mobile device tracking its position in space, whilst recognising that a cereal bowl and a glass of orange juice are sitting on the table. This in turn affects the AR content which is shown, whilst providing the ability to make virtual copies of the recognised objects within the scene.

The results of this are strikingly similar to the work of PhD researchers Richard Newcombe et al., who were working on an algorithm called SLAM++ before their company, ‘Surreal Vision’, was acquired by Facebook/Oculus for an undisclosed sum in 2015.

Whilst I have doubts about whether the video from F8 was really recorded on a mobile device without post-editing, I have no doubt that Facebook is working hard on making these algorithms robust enough for eventual product release.

Reality Capture

An area of particular interest for Facebook is how to recreate or capture realistic scenes for virtual reality.

In pursuit of this aim, last year Facebook announced the Surround 360, its open source stereoscopic 360-degree camera and software stack. By open-sourcing its camera design & stitching algorithms whilst promoting 360-degree video within Facebook Timeline & Oculus, the company has been able to corner part of the market without incurring significant production costs.

But for VR, stereoscopic 360 video isn’t enough for complete immersion.

Research by Sanford showed that VR needs to have 6 degrees-of-freedom (i.e. the ability to actually move within a scene, as opposed to just being able to rotate your head) in order to fool the brain into believing that the environment is real.
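As a rough illustration of the difference, the sketch below expresses a point in the world relative to the viewer's head, with and without positional tracking. It is a toy example under my own assumptions: the function name is hypothetical, the axes are x to the right, y up and z forward, and rotation is reduced to yaw only to keep it short.

```python
import math


def to_view_space(point, head_position, head_yaw_rad, track_position):
    """Express a world-space point (x, y, z) relative to the viewer's head.

    Rotation is reduced to yaw (turning left/right) for brevity; a real
    headset would also track pitch and roll.
    """
    px, py, pz = point
    if track_position:
        # 6 degrees-of-freedom: the head's translation is taken into account.
        hx, hy, hz = head_position
        px, py, pz = px - hx, py - hy, pz - hz
    # Rotate by the inverse of the head's yaw so the point ends up in the
    # head's own frame of reference.
    c, s = math.cos(-head_yaw_rad), math.sin(-head_yaw_rad)
    return (c * px + s * pz, py, -s * px + c * pz)


# A point 2 m straight ahead; the viewer leans 0.1 m to the right.
point = (0.0, 0.0, 2.0)
head = (0.1, 0.0, 0.0)

six_dof = to_view_space(point, head, 0.0, track_position=True)
three_dof = to_view_space(point, head, 0.0, track_position=False)
print(six_dof)    # (-0.1, 0.0, 2.0): the point shifts left, as it would in reality
print(three_dof)  # (0.0, 0.0, 2.0): the scene stays glued to the viewer's head
```

With rotation-only tracking the world never shows parallax when you lean or step, which is the cue the brain appears to miss in plain 360 video.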

At this year’s F8, Facebook announced the next versions of the Facebook Surround 360, the x24 & the x6. The cameras, whose names refer to the number of lenses each system has, seem to have taken inspiration from the way old omnipolar cameras captured stereo several years ago.

In these configurations, any single point in space has at least 3 cameras covering the area. By triangulating each of the points, a 3D understanding or ‘depth map’ of the scene can be created. This depth map can in turn be used to provide 6 degrees-of-freedom to optimise 360 degree video for VR.
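The triangulation step can be sketched in its simplest form: two side-by-side, rectified cameras, where depth follows from how far a point shifts between the two images (its disparity). This is a textbook simplification rather than Facebook's actual multi-camera pipeline, and the function names, focal length, baseline and disparity values are all hypothetical.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth (metres) of a point seen by two rectified cameras.

    Z = f * B / d, where f is the focal length in pixels, B the distance
    between the two cameras in metres and d the disparity in pixels.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def depth_map(disparities, focal_length_px, baseline_m):
    """Convert a 2D grid of disparities into a 2D grid of depths."""
    return [
        [depth_from_disparity(focal_length_px, baseline_m, d) for d in row]
        for row in disparities
    ]


# Hypothetical 2x2 disparity grid: large shifts are near, small shifts are far.
disparities = [[40.0, 20.0],
               [10.0, 5.0]]
depths = depth_map(disparities, focal_length_px=800.0, baseline_m=0.125)
print(depths)  # [[2.5, 5.0], [10.0, 20.0]]
```

Having at least three cameras cover every point, as described above, gives the system more than one such pair to choose from, which should help when one view is occluded or a match is ambiguous.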

Why is this important for AR?

Creating cameras that understand the depth of their environment is vital for enabling rich AR experiences.

By promoting the use of their new camera infrastructure, Facebook are not only completing the circle between Oculus & Facebook Timeline, they are also potentially creating a huge amount of training data which could eventually allow regular 360 degree cameras to infer 3D depth information from a 2D video feed.

Need another sign that Facebook is serious about 360 degree imagery?

Through a partnership with 360 camera company Giroptic, Facebook handed a 360 degree camera to every developer in attendance at the F8 conference.

Oh, and one more thing…

On the second day of Facebook’s conference, executive Regina Dugan casually asked the audience “so what if you could type directly from your brain?”

As with most new technologies, this is a prospect that seems like science fiction. But it’s an area that has recently been gathering significant momentum.

Dugan explains that Facebook’s aim is to create a system that allows users to type 100 words per minute (5x faster than you can type on your smartphone), through brain activity alone. And the company wants users to achieve this without having to undergo brain surgery in advance.

The examples Facebook gave of why this would be a good idea involved restoring communication to people living with ALS.

But to really understand why Facebook might want to develop this, we must combine this with our understanding of the company’s ambitions in AR.

Right now, we have various non-invasive methods to measure the brain’s responses to specific stimuli. However, whilst we can measure activity, researchers still struggle to make sense of the data collected.

And this is where AR could be the missing link.

With an AR head-mounted display, you are literally giving hardware providers access to your entire field of view. Not only will these devices have cameras looking outwards to track the environment, they will also have cameras looking inwards to track the position of your eyes. In fact, at CES earlier this year, I was blown away by the eye tracking capability of a company called Tobii, who had already built this capability into a modified VR headset to great effect.

This means companies like Facebook could have access to not only your entire field of view, but also your area of focus, at any one time. Pair this up with the ability to measure acute brain activity and you suddenly have a system where stimulus & response can be measured from millions of individuals, 24/7, from all around the world.

The consequences, both positive and negative, of gathering this data and mining it for your likes, dislikes, fears or desires are profound.

So where does this leave AR startups?

The impact of Facebook’s F8 conference will likely have been the source of much internal discussion within existing AR companies like Blippar and Snap.

Whilst the idea of the camera being the first AR platform is a vision most Snapchat users are familiar with (the similarities to Snap’s S-1 filing have not been missed), Facebook does have the advantage of having a far larger network of users on its side.

In less than a month, Google will host its annual I/O conference, where I’m sure the tech giant will reveal similar ideas that the company has been working on. This will surely include more information from the team working on Tango, Google’s AR platform for smartphones. A month after that, Apple will be forced to show its cards at its annual developer conference.

As Facebook is primarily a software/platform company, this could be an opportunity for Facebook to revive some aspects of the Facebook phone it experimented with several years ago, in order to maintain an element of control at the device level. Releasing a dedicated ‘AR Camera’ app could be a good way to give Facebook the freedom to release AR experiments in a way which wouldn’t diminish the overall user experience of the already crowded Facebook app. However, this decision could also be at risk of cannibalizing the engagement of existing camera applications, such as Instagram or Messenger.

The elephant in the room is that by externally promoting an ‘open ecosystem’, whilst in reality tying everybody into the Facebook platform, Facebook has the potential to provide the catalyst the AR industry needs, whilst suffocating competition at the application level before it even starts. This is why it is now more important than ever for AR companies to collaborate and promote open standards as opposed to locking both developers and consumers into walled gardens.

I expect to see AR software companies like Blippar & Niantic collaborate more with hardware manufacturers & strategic partners, providing the opportunity for both parties to grow outside of the bubbles of Facebook, Google or Apple.

Conclusion

It’s clear from the keynote that Facebook is going all in on augmented reality and in terms of significance, this is right up there with Facebook’s acquisition of Oculus.

What we saw at Facebook’s F8 was a glimpse into some of the technologies which will be required to kickstart the next epoch in personal computing.

As the co-founder of an AR company myself, it gives me confidence to see Facebook supporting the medium in such a strong way.

At Scape Technologies, we’re building core infrastructure to connect the physical world with digital services or content, whether for augmented reality or self-driving cars. We believe this will be one of the fundamental technologies required to empower an entirely new set of industries in the years to come.

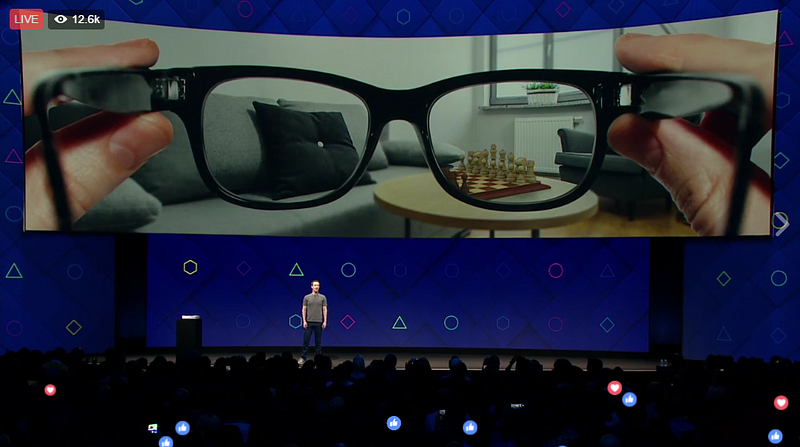

Following this belief, a few months ago I gave a TEDx talk on the challenges AR needs to overcome before it could live up to expectations. These challenges include improved tracking & object recognition algorithms, robust server-side mapping & localisation infrastructure, longer battery life, miniaturized components, eye-tracking, speech recognition, brain-computer interfaces, brand-new optical displays, distribution platforms, new operating systems & killer applications.

And all of this needs to be wrapped up inside a portable & stylish device we’d feel comfortable using around our friends without fear of ridicule or social rejection. We have a long way to go.

By Zuckerberg’s own admission, the journey towards this imagined future is not even 1% complete. In optimising for the camera first, companies like Facebook and Snap are ensuring that the industry isn’t waiting on the likes of HoloLens or Magic Leap before being able to build the AR ecosystem.

In the same way Facebook’s acquisition of Oculus provided the kickstart the VR industry needed, the clear demonstration of commitment at this year’s F8 will do the same for augmented reality.

If indeed AR is going to be the technology which replaces the mobile phone in your pocket or the computer on your desk, there will be a long and fruitful road ahead for companies willing to have their say in shaping the future of this exciting new medium.