
ARKit and ARCore will not usher in mass adoption of mobile AR

The AR Cloud will.

With the release of Apple’s ARKit today and Google’s ARCore, creating Augmented Reality (AR) apps became a commodity overnight. It’s free, it’s cool, and it just works.

So far the “mass adoption” is mostly among AR developers, and it’s generating tons of YouTube views. But developers have yet to prove their apps can break through the first batch of novelty apps and gain mass user adoption.

To be sure, there will be a couple of mega-hits (e.g. Pokemon Go) that will enjoy the newly created mega-distribution channel for AR apps on iPhones and high-end Android phones. But I do not anticipate hundreds of millions of people using AR apps all day, every day.

ARKit & ARCore-based AR apps are like surfing the web with no friends. It’s so 1996.

Don’t get me wrong: the ARKit release today is the best thing that has happened to the AR industry, but mass adoption of AR apps will take more than that. It will happen with The AR Cloud, when AR experiences persist in the real world across space, time, and devices.

I was hoping the iPhone X would introduce a key ingredient for mass AR adoption: not ARKit, but a back-facing depth camera. That would have put in the hands of tens of millions of people a camera that senses the shapes of their surroundings and can create a rich, accurate 3D map of the world, shared by users and for users on The AR Cloud. That hasn’t happened yet; hopefully it will in the next version. Its absence will slow down the creation of the AR Cloud, but it cannot stop the demand for it.

The great pivot of 2017

In the past couple of years, with growing awareness of AR and new mobile devices catching up with AR’s heavy computing needs, many startups have been working on AR tracking capabilities similar to ARKit and ARCore. But since the one-two punch of the Apple and Google announcements, these startups have been scrambling to adapt their positioning in order to keep surfing this hot new wave. The natural pivot is typically toward cross-platform capabilities. On the one hand, it’s a logical move up the stack; on the other hand, how big is the opportunity for this additional thin layer? And isn’t Unity already providing that cross-platform capability (build once, deploy on many)? Won’t these startups need to offer beefier tools, services, and content to justify their existence in a new layer?

I tend to look for the positive outcome of such dramatic announcements, so my immediate thought was: Kit&Core finally freed AR tech startups from the need to tackle this hard problem, and allowed the clever ones to move up the value chain and focus on a much more lucrative prize: The AR Cloud.

AR researchers and industry insiders have long envisioned that at some point in the future, the realtime 3D (or spatial) map of the world, The AR Cloud, will be the single most important software infrastructure in computing, far more valuable than Facebook’s social graph or Google’s PageRank index.

I’ve been working in AR for a decade now, and the company I co-founded in 2009 (Ogmento->Flyby) was acquired by Apple to become the foundation for ARKit; interestingly, prior to that acquisition, the same tech was also licensed to Google Tango. I also co-founded AWE (a conference dedicated to AR) and Super Ventures (a fund dedicated to AR), so I have a pretty broad view of the AR industry and am always hunting for areas where AR can generate epic value.

This post is intended to draw attention to the value that can be created in the AR Cloud beyond ARKit and ARCore.

For a deep dive on ARKit, ARCore, and their implications, look no further than the excellent (and now famous) post series by my partner at Super Ventures, Matt Miesnieks.

For articles about collaboration in AR, check out the post by my other Super Ventures partner, Mark Billinghurst, one of the world’s leading experts in AR collaboration.

Why do we need the AR Cloud?

In a nutshell, with the AR Cloud, the entire world becomes a shared spatial screen, enabling multi-user engagement and collaboration.

Most apps built on Kit&Core will be single-user AR experiences, which limits their appeal. YouTube is sure to bloat with an overabundance of shared videos boasting AR special effects and other AR parlor tricks.

But sharing a video of an AR experience is not like sharing the *actual* experience. To borrow from Magritte: this is not an actual pipe.

The AR Cloud is a shared memory of the physical world and will enable users to have shared experiences, not just shared videos or messages. It will allow people to collaborate in play, design, or study, or to team up to solve problems anywhere in the real world.

Multi user engagement is a big part of the AR Cloud. But an even bigger promise lies in the persistence of information in the real world.

We are on the verge of a fundamental shift in the way information is organized. Today, most of the world’s information is organized in digital documents, videos, and information snippets, stored on servers and accessible from anywhere on the net. But finding it requires some form of search or discovery.

According to recent Google stats, over 50% of searches are done on the move (searched locally). There is a growing need to find information right where you need it, in the moment.

The AR Cloud will serve as a soft 3D copy of the world and allow us to reorganize information at its origin: in the physical world (or, as scientists say in Latin, in situ).

With the AR Cloud, instructions for using any object, the history of any place, the background of any person will be found right there, on the thing itself.

And whoever controls that AR cloud could control how the world’s information is organized and accessed.

When will this happen?

Won’t the AR Cloud be commoditized before startups have a chance at it, as happened with ARKit?

The AR Cloud is not for the faint of heart. Industry leaders are imploring developers, professionals, and consumers alike to be patient, as it’ll take a while for this to materialize. But for investors in AR and frontier tech, this is the time to identify the potential winners of this long-distance race.

For reference, let’s take a quick look at the timeline for the AR tracking technology that was just commoditized with ARKit and ARCore:

- First point cloud conceived: 19th century

- First realtime SLAM using computer vision, by Andrew Davison: 1998

- First monocular SLAM demonstrated on the iPhone, by Georg Klein: 2008

- First dedicated AR device announced (Project Tango): 2012

- SLAM on iPads with the Occipital Structure Sensor: 2013

- Flyby demonstrates ARKit-like capabilities: 2013

- ARKit and ARCore announced: 2017

Judging by this short history lesson, the trajectory points to 3 or more years before a mature AR Cloud service is widely available.

In the meantime, startups can tackle parts of the overall vision, gain a first-mover advantage, and build services for specific needs (such as for enterprises).

Aren’t there any AR Clouds yet?

In the past decade, several companies have been providing AR services from the cloud, starting with Wikitude as early as 2008, then Layar, Metaio (Junaio), and later Vuforia, Blippar, Catchoom, and now new entrants (see the AR Landscape). But these cloud services typically fall into one of two types:

- storing GPS or location-related information (for displaying a message bubble in a restaurant) or

- providing image recognition service in the cloud to trigger AR experiences.

These cloud services have no understanding of the actual scene or the geometry of the physical environment, and without that understanding it’s tricky to blend virtual content with the real world in a believable way, let alone share the actual experience (not just a cool video) with others.

Wait, what about Pokemon Go’s mass appeal?

The incredible reach of Pokemon Go was a fluke, an outlier, one of a kind. It will be very hard, if not impossible, to replicate its success with similar game mechanics. The game servers store geolocation information, hyper-local imagery, and players’ activity, but not a shared memory of the physical places in which its 65 million monthly active users are playing. Thus, no real shared experience can occur.

For that it would need The AR Cloud.

What is The AR Cloud?

Several scientists have conceived different aspects of the AR Cloud since the 1990s, but I have yet to see a concise description for the rest of us. So here is my simplified version in the context of the AR industry.

An AR Cloud system should include:

1) A persistent point cloud aligned with real world coordinates — a shared soft-copy of the world

2) The ability to instantly localize (align the world’s soft-copy with the world itself) from anywhere and on multiple devices

3) The ability to place virtual content in the world’s soft-copy and interact with it in realtime, on-device and remotely
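To make the three requirements concrete, here is a minimal Python sketch of what an AR Cloud service interface might look like. Every name in it (`ARCloud`, `contribute`, `localize`, `place`, `anchors_near`, `InMemoryARCloud`) is hypothetical, poses are simplified to a position plus a heading, and the toy in-memory version deliberately leaves localization as a placeholder. It only shows how the three pieces fit together, not how any real service implements them.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Protocol, Tuple, runtime_checkable

Vec3 = Tuple[float, float, float]


@dataclass
class Pose:
    """Device pose in shared world coordinates (yaw-only rotation, for brevity)."""
    position: Vec3
    yaw: float


@dataclass
class Anchor:
    """A piece of virtual content registered at a fixed world position."""
    anchor_id: str
    position: Vec3
    payload: str


@runtime_checkable
class ARCloud(Protocol):
    # 1) Persistent, world-aligned point cloud fed by many devices.
    def contribute(self, device_id: str, points: List[Vec3]) -> None: ...

    # 2) Localize a device from the points its camera currently observes.
    def localize(self, observed: List[Vec3]) -> Optional[Pose]: ...

    # 3) Place content in the shared copy and query it from any device.
    def place(self, anchor: Anchor) -> None: ...

    def anchors_near(self, position: Vec3, radius: float) -> List[Anchor]: ...


class InMemoryARCloud:
    """Toy single-process stand-in: it stores data but does not really localize."""

    def __init__(self) -> None:
        self.points: Dict[str, List[Vec3]] = {}
        self.anchors: Dict[str, Anchor] = {}

    def contribute(self, device_id: str, points: List[Vec3]) -> None:
        self.points.setdefault(device_id, []).extend(points)

    def localize(self, observed: List[Vec3]) -> Optional[Pose]:
        return None  # placeholder: a real localizer matches features and solves for pose

    def place(self, anchor: Anchor) -> None:
        self.anchors[anchor.anchor_id] = anchor

    def anchors_near(self, position: Vec3, radius: float) -> List[Anchor]:
        return [a for a in self.anchors.values() if math.dist(a.position, position) <= radius]
```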

Let’s break it down:

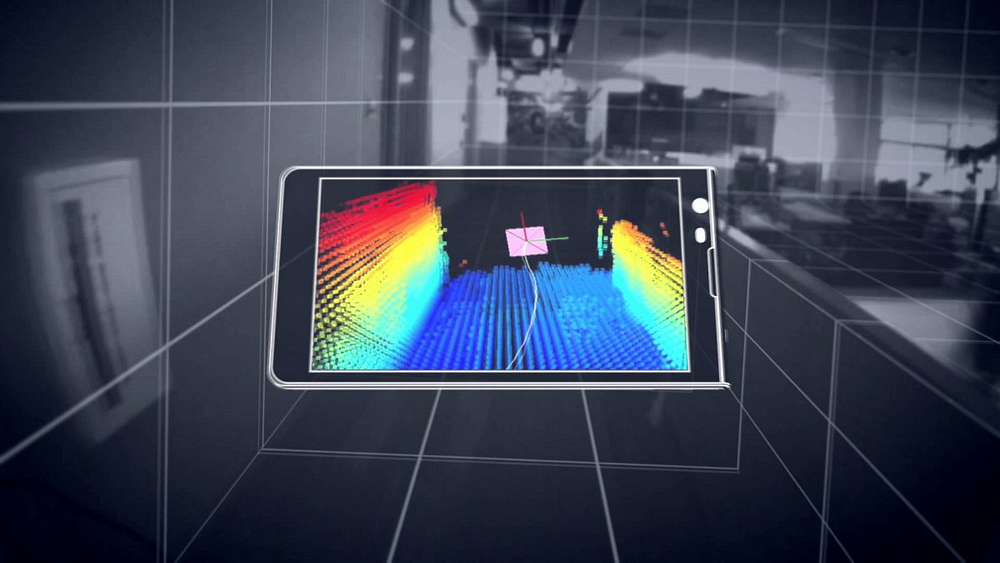

1) A Persistent Point cloud aligned with the real world coordinates

A point cloud, as defined by Wikipedia, is “a set of data points in some coordinate system (x,y,z)”, and by now point clouds are a common technique for 3D mapping and reconstruction, surveying, inspection, and other industrial and military uses. Capturing a point cloud from the physical world is a “solved problem” in engineers’ jargon. Dozens of hardware and software solutions for creating and processing point clouds have been around for a while: active laser scanners such as LiDAR; depth or stereo cameras such as Kinect; monocular camera photos, drone footage, or satellite imagery processed with photogrammetry algorithms; and even synthetic aperture radar systems (radio waves) such as Vayyar or space-borne radars.

For perspective, photogrammetry was invented right along with photography, so the first point cloud was conceived in the 19th century.
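“Aligned with the real world coordinates” is the part that turns a device’s throwaway map into a shared one. Each tracking session builds its point cloud in its own local frame, whose origin is wherever the app started; aligning it with the world means applying a rigid transform (a rotation plus a translation) to every point. The sketch below assumes the alignment is already known and simplifies the rotation to a heading (yaw) about the vertical axis; the function name and values are illustrative.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def local_to_world(points: List[Vec3], yaw: float, translation: Vec3) -> List[Vec3]:
    """Map points from a device's session frame into shared world coordinates.

    Applies p_world = R_z(yaw) * p_local + t, assuming z is "up" and that the two
    frames differ only by a heading rotation and a translation. A real system would
    estimate a full 3D rotation, and a scale factor for monocular maps.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]


# A session-local cloud: its origin is wherever the app happened to start.
session_points = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.5)]
# Hypothetical alignment: the session is rotated 90 degrees and offset 10 m along x.
world_points = local_to_world(session_points, math.pi / 2, (10.0, 0.0, 0.0))
```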

The crowd-sourced AR Cloud

To ensure the widest coverage and always keep a fresh copy of the world, a persistent point cloud requires another level of complexity: the cloud database needs a mechanism to capture and store a unified set of point clouds fed from various sources (including mobile devices), and its data needs to be accessible to many users in realtime.

The solution may use the best native scanning or tracking mechanism on a given device (Kit, Core, Tango, Zed, Occipital, etc.), but it must store the point cloud data in a cross-platform, accessible database.
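One way to picture such a unified, always-fresh store: every contribution is converted to shared world coordinates and tagged with its source platform and capture time, then bucketed into small voxels so that a newer observation of the same spot replaces an older one. This is a single-process toy under those assumptions; the `Observation` and `SharedPointStore` names, the 5 cm voxel size, and the newest-wins policy are all illustrative. A real service would need a distributed database, conflict handling, and privacy protections.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple

Vec3 = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


@dataclass(frozen=True)
class Observation:
    point: Vec3        # already expressed in shared world coordinates
    source: str        # free-form platform label, e.g. "arkit" or "tango" (hypothetical)
    timestamp: float   # capture time in seconds; used to keep the map fresh


class SharedPointStore:
    """Toy cross-platform store: keeps one observation per voxel, newest wins."""

    def __init__(self, voxel_size: float = 0.05) -> None:
        self.voxel_size = voxel_size
        self.voxels: Dict[VoxelKey, Observation] = {}

    def _key(self, p: Vec3) -> VoxelKey:
        return (math.floor(p[0] / self.voxel_size),
                math.floor(p[1] / self.voxel_size),
                math.floor(p[2] / self.voxel_size))

    def ingest(self, observations: Iterable[Observation]) -> None:
        for obs in observations:
            key = self._key(obs.point)
            current = self.voxels.get(key)
            # A newer observation of the same spot replaces the stale one.
            if current is None or obs.timestamp > current.timestamp:
                self.voxels[key] = obs

    def coverage_by_source(self) -> Counter:
        """How many voxels each platform currently 'owns'."""
        return Counter(obs.source for obs in self.voxels.values())
```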

The motivation for users to share their personal point clouds will be similar to the motivation Waze users have: they get a valuable service (optimal navigation directions) while, in the background, sharing information collected on their devices (their speed on a given road segment) to improve the service for other users (updated travel times for other drivers).

Nevertheless, this could pose serious security and privacy concerns for AR mapping services, since the environments mapped would be much more intimate and private than your average public road or highway. I see a great opportunity for crypto-point-cloud startups.

Depth cameras accelerate the creation of the AR Cloud

Depth cameras help a great deal in creating 3D maps. In fact, a depth camera is such a critical component that without one a regular smartphone can’t contribute much to a 3D map.

With a depth camera, you get a better understanding of the scene’s geometry. The algorithms may work much as they do with a regular smartphone camera, but the captured point clouds will be denser and more accurate, resulting in a much higher-quality AR Cloud.
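Why depth helps so much: with a depth camera, every pixel with a valid depth reading can be back-projected into a 3D point using the standard pinhole camera model, X = (u - cx) * d / fx, Y = (v - cy) * d / fy, Z = d, whereas a monocular tracker only recovers a sparse set of feature points. The sketch below assumes a depth image in metres and known camera intrinsics (`fx`, `fy`, `cx`, `cy`); it ignores lens distortion and sensor noise.

```python
from typing import List, Optional, Sequence, Tuple


def depth_to_points(depth: Sequence[Sequence[Optional[float]]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Back-project a depth image into a camera-frame point cloud (pinhole model)."""
    points = []
    for v, row in enumerate(depth):          # v: pixel row
        for u, d in enumerate(row):          # u: pixel column, d: depth in metres
            if d is None or d <= 0:
                continue  # no depth return for this pixel
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            points.append((x, y, d))
    return points
```

Every valid pixel becomes a point, which is exactly why depth-equipped devices produce much denser clouds than feature-based tracking alone.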

A few devices offered that capability before but never saw mass adoption: Tango was an experiment with a few thousand units sold; the Occipital sensor sold a few thousand units; the Tango-based Lenovo Phab 2 Pro, launched in 2017, is the first commercial smartphone with a depth camera but has seen only modest adoption; and the new Asus ZenFone AR is slicker but still marginal.

The iPhone X still doesn’t have a back-facing depth camera; once an iPhone does, it will offer exponentially more value to users. A lot of users.

Multi-user is not new

The multi-user realtime access requirement of the AR Cloud is similar in concept to the server capability at the heart of any MMO (massively multiplayer online game), which allows a massive number of users to access the information from anywhere and collaborate in real time. Except that in an MMO you’ll find a fully synthetic, persistent open world stored along with the characters, storyline, and content, while in the AR Cloud the real world is the open world, and the point cloud, along with augmented information, is perfectly aligned with it.

3D maps are not new

3D maps are already in wide use for autonomous cars, robots, and drones. Even autonomous vacuum cleaners such as the Roomba use maps for navigation, and iRobot, the Roomba’s maker, is even considering sharing these maps.

The AR Cloud is special

But the point cloud for AR is not your average point cloud; it needs to be AR-friendly. If a 3D map is too dense, it will be too slow to process on mobile devices, where users expect an instant response. If the map is partial, it may not be sufficient for localization from every angle, which breaks the experience for the user. In other words, an AR Cloud is a special case of point clouds with its own set of parameters.
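A common way to keep a map AR-friendly is to thin it out before shipping it to a phone. Voxel-grid downsampling is one standard technique: split space into cubes of a chosen size and replace all the points inside each cube with their centroid. The sketch below is a generic illustration, not the method of any particular AR Cloud; the voxel size is the knob that trades density against processing speed.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def voxel_downsample(points: List[Vec3], voxel_size: float) -> List[Vec3]:
    """Replace all points falling in the same voxel with their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Vec3]] = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / voxel_size),
               math.floor(p[1] / voxel_size),
               math.floor(p[2] / voxel_size))
        buckets[key].append(p)
    centroids = []
    for pts in buckets.values():
        n = len(pts)
        centroids.append((sum(p[0] for p in pts) / n,
                          sum(p[1] for p in pts) / n,
                          sum(p[2] for p in pts) / n))
    return centroids
```

A larger voxel gives a lighter map that loads and matches faster on a phone, at the cost of fewer distinctive points to localize against.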

Google Tango calls it “Area Learning — which gives the device the ability to see and remember the key visual features of a physical space — the edges, corners, other unique features — so it can recognize that area again later.”

The AR Cloud’s requirements may vary between indoor and outdoor settings, and between enterprise and consumer use cases.

But the most fundamental need is for fast localization.

2) Instant localization from anywhere on multiple devices

Localizing means estimating the camera pose, which is the foundation of any AR experience.

In order for an AR app to position virtual content in a real world scene the way it was intended by its creator, the device needs to understand the position of its camera’s point of view relative to the geometry of the environment itself.
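Concretely, knowing the camera pose is what lets the app draw a world-anchored object at the right pixel. Given a world-to-camera pose (a rotation `R` and a translation `t`) and the camera intrinsics, a point is projected with the pinhole model. The sketch assumes the OpenCV convention (camera looking down +z) and ignores lens distortion; the function name is illustrative.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def project(point_world: Vec3, R: Mat3, t: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a world-anchored point into the image of a camera with pose (R, t).

    (R, t) maps world to camera: p_cam = R * p_world + t. Returns pixel (u, v),
    or None when the point is behind the camera.
    """
    xc, yc, zc = (sum(R[i][j] * point_world[j] for j in range(3)) + t[i] for i in range(3))
    if zc <= 0:
        return None  # behind the camera: nothing to draw
    return (fx * xc / zc + cx, fy * yc / zc + cy)
```

If the pose is wrong by even a few centimetres or degrees, the content lands on the wrong pixels, which is why localization quality makes or breaks the experience.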

To find its position in the world, the device needs to compare key feature points in the camera view with points in the AR Cloud and find a match. To achieve instant localization, an AR Cloud localizer must narrow down the search based on the direction of the device and its GPS location (as well as triangulation from other signals such as Wi-Fi and cellular). The search could be further optimized by using more context data and AI.
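The two-stage search described above can be sketched as follows, under several assumptions: the map is stored as keyframes tagged with GPS coordinates and compass heading, visual features are binary descriptors compared by Hamming distance (as with ORB-style features), and every threshold is an arbitrary illustrative value. The coarse stage keeps only keyframes near the GPS fix and roughly facing the same way; the fine stage picks the candidate sharing the most features. A production localizer would go further and solve for the full 6-DoF pose from the matches (for example with PnP inside RANSAC), which this sketch omits.

```python
import math
from dataclasses import dataclass
from typing import List, Optional

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


@dataclass
class Keyframe:
    keyframe_id: str
    lat: float
    lon: float
    heading_deg: float      # compass heading when the keyframe was captured
    descriptors: List[int]  # binary feature descriptors packed into ints (assumption)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two GPS fixes."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp, dl = p2 - p1, math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def heading_diff_deg(a: float, b: float) -> float:
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def count_matches(query: List[int], reference: List[int], max_hamming: int) -> int:
    """Number of query descriptors with a reference descriptor within max_hamming bits."""
    return sum(1 for q in query
               if any(bin(q ^ r).count("1") <= max_hamming for r in reference))


def localize(keyframes: List[Keyframe], lat: float, lon: float, heading_deg: float,
             query: List[int], radius_m: float = 50.0, max_heading_diff: float = 60.0,
             max_hamming: int = 8, min_matches: int = 3) -> Optional[Keyframe]:
    # Coarse stage: GPS radius and compass heading prune the search space.
    candidates = [k for k in keyframes
                  if haversine_m(lat, lon, k.lat, k.lon) <= radius_m
                  and heading_diff_deg(heading_deg, k.heading_deg) <= max_heading_diff]
    # Fine stage: keep the candidate that shares the most visual features.
    best, best_score = None, 0
    for k in candidates:
        score = count_matches(query, k.descriptors, max_hamming)
        if score > best_score:
            best, best_score = k, score
    return best if best_score >= min_matches else None
```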

Once a device is localized, ARKit or ARCore (using the IMU and computer vision) can take over and perform stable tracking; as mentioned before, that problem is already solved and commoditized.
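The hand-off works because poses compose. Once the AR Cloud localizer has found where the device’s local tracking session sits in the world (`world_T_session`), every per-frame pose reported by local tracking (`session_T_device`) can be lifted into world coordinates with a single matrix product, and world-anchored content can be brought into the session frame with the inverse transform. The sketch below uses 4x4 homogeneous matrices with a heading-only rotation for brevity; the variable names and values are hypothetical.

```python
import math
from typing import List, Tuple

Mat4 = List[List[float]]
Vec3 = Tuple[float, float, float]


def yaw_translation(yaw: float, tx: float, ty: float, tz: float) -> Mat4:
    """Rigid transform: rotation about the vertical (z) axis, then translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(a: Mat4, b: Mat4) -> Mat4:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(m: Mat4) -> Mat4:
    """Inverse of [R t; 0 1] is [R^T  -R^T t; 0 1]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    nt = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [nt[0]], rt[1] + [nt[1]], rt[2] + [nt[2]], [0.0, 0.0, 0.0, 1.0]]


def apply(m: Mat4, p: Vec3) -> Vec3:
    return tuple(sum(m[i][k] * p[k] for k in range(3)) + m[i][3] for i in range(3))


# Computed once by the (hypothetical) AR Cloud localizer.
world_T_session = yaw_translation(math.pi / 2, 10.0, 0.0, 0.0)
# Reported every frame by local tracking (ARKit/ARCore); example value.
session_T_device = yaw_translation(0.0, 1.0, 0.0, 0.0)
world_T_device = matmul(world_T_session, session_T_device)
# Content stored in world coordinates, re-expressed in the session frame for rendering.
anchor_in_session = apply(rigid_inverse(world_T_session), (12.0, 3.0, 0.0))
```

The expensive global localization only has to run occasionally; between runs, cheap local tracking keeps the composed world pose up to date.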

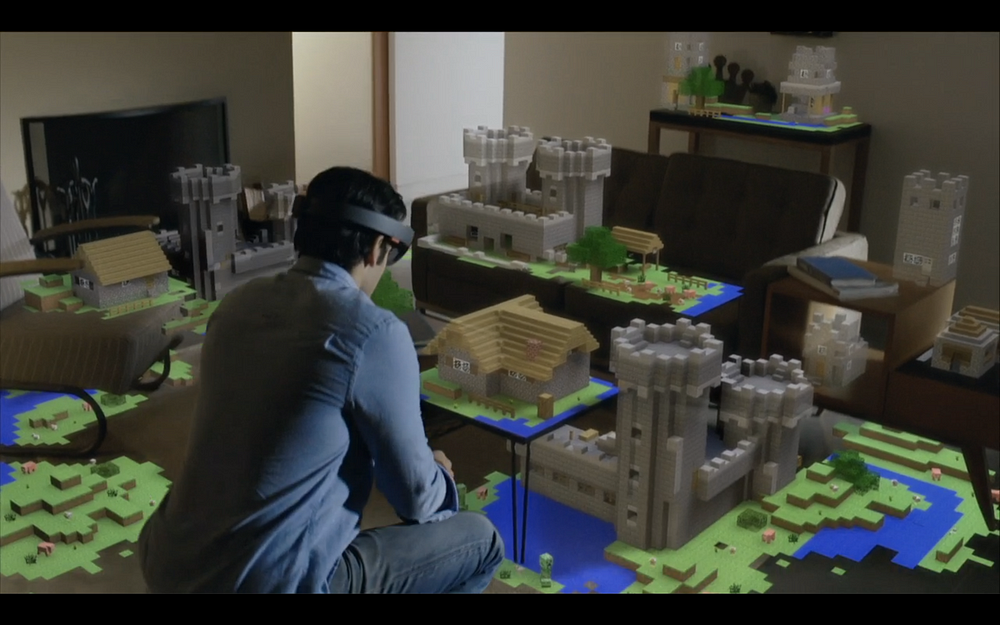

Dozens of solutions exist today to localize a device, such as Google Tango, the Occipital Structure Sensor, and of course ARKit and ARCore. But these out-of-the-box solutions can only localize against a local point cloud, one at a time. Microsoft HoloLens can localize against a set of point clouds created on the same device, but (out of the box) it can’t localize against point clouds created by other devices.

The search is on for the “ultimate localizer” that can localize against a vast set of local point clouds from any given angle and share the point cloud with multiple devices across platforms. But any localizer will be only as good as its persistent point cloud, which is a strong motivation to try to build both.

3) Place and visualize virtual content in 3D and interact with it in realtime on device and remotely

Once you have a persistent point cloud and the ultimate localizer, the next requirement to complete an AR Cloud system is the ability to position and visualize virtual content registered in 3D. “Registered in 3D” is technical jargon for “aligned with the real world as if it’s really there”. The virtual content needs to be interactive, so that multiple users holding different devices can observe the same slice of the real world from various angles and interact with the same content in realtime.
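For the “multiple users, same content, realtime” part, one simple approach is a server-side scene that stores content anchors in world coordinates and broadcasts every accepted change to all subscribed devices. The sketch below is a hypothetical, single-process illustration: edits carry the version they were based on, and stale edits are rejected (optimistic concurrency), which is only one of several possible conflict policies. Networking, authentication, and persistence are left out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class AnchorState:
    anchor_id: str
    position: Vec3   # world coordinates, identical for every device
    payload: str
    version: int


class SharedScene:
    """Toy server-side scene shared by all devices looking at the same place."""

    def __init__(self) -> None:
        self.anchors: Dict[str, AnchorState] = {}
        self.subscribers: List[Callable[[AnchorState], None]] = []

    def subscribe(self, callback: Callable[[AnchorState], None]) -> None:
        self.subscribers.append(callback)
        for state in self.anchors.values():
            callback(state)  # late joiners receive the current scene

    def update(self, anchor_id: str, position: Vec3, payload: str, base_version: int) -> bool:
        current = self.anchors.get(anchor_id)
        current_version = current.version if current is not None else 0
        if base_version != current_version:
            return False  # stale edit: the client must refresh and retry
        state = AnchorState(anchor_id, position, payload, current_version + 1)
        self.anchors[anchor_id] = state
        for callback in self.subscribers:
            callback(state)  # broadcast to every device, including the editor
        return True
```

Because anchors live in world coordinates, each device only needs its own world pose to render them correctly from its own angle.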

“God Mode”

A crucial feature for managing and maintaining an AR Cloud and its applications is God Mode: the ability to remotely place 3D content (plus interactions) in any corner of the entire point cloud. This is somewhat similar to games such as Black & White, which let the player play God: pick a location on a map, view a scene from different angles, monitor the activity in realtime, and mess around with it. This could be done on a computer, a tablet, or other devices. These types of tools will define a new category of content management systems for the real world.
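God Mode starts with a translation problem: an operator picks a spot on a map in latitude, longitude, and altitude, but the AR Cloud needs metric coordinates. Assuming (hypothetically) that the AR Cloud’s world frame is a local east-north-up (ENU) frame anchored at a known geodetic origin, the conversion goes through Earth-centred, Earth-fixed (ECEF) coordinates on the WGS84 ellipsoid, as sketched below. The function names are illustrative.

```python
import math
from typing import Tuple

WGS84_A = 6378137.0                    # semi-major axis (m)
WGS84_F = 1 / 298.257223563            # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)     # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, h: float) -> Tuple[float, float, float]:
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def geodetic_to_enu(lat_deg: float, lon_deg: float, h: float,
                    lat0_deg: float, lon0_deg: float, h0: float) -> Tuple[float, float, float]:
    """East/north/up offset (m) of a picked map point from the frame origin."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, h)
    x0, y0, z0 = geodetic_to_ecef(lat0_deg, lon0_deg, h0)
    dx, dy, dz = x - x0, y - y0, z - z0
    sl, cl = math.sin(math.radians(lat0_deg)), math.cos(math.radians(lat0_deg))
    so, co = math.sin(math.radians(lon0_deg)), math.cos(math.radians(lon0_deg))
    east = -so * dx + co * dy
    north = -sl * co * dx - sl * so * dy + cl * dz
    up = cl * co * dx + cl * so * dy + sl * dz
    return east, north, up
```

The returned offsets could then be used as the world position of a content anchor placed remotely from the map view.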

That concludes the 3 requirements of The AR Cloud. Sounds hard. Is there anyone capable of building it today?

Who can build it?

The AR Cloud is such a mega project that perhaps 3 companies in the world have deep enough pockets and sufficiently big ambitions to tackle this.

Apple, Google, and Microsoft could each someday pull off the full AR Cloud. Actually, if they don’t, they will get screwed by whoever does.

But if history teaches us anything, it’s that it will take a (crazy) startup to put together the building blocks and prove the concept, because only a startup has the audacity to believe it can build it.

It’ll take a crazy good startup.

Since it’s too big for one startup, we may see different startups tackling different parts of it. At least at first.

Crazy good startups who tackled parts of the AR Cloud and got acquired

- 13th Lab (acquired by Facebook)

- Obvious Engineering/Seene (Snap)

- Cimagine (Snap)

- Ogmento->Flyby (Apple). Flyby also licensed its tech to Tango.

- Georg Klein* (Microsoft).

*Georg is a person, not a startup, but has single-handedly outdone many companies. See his first ARKit-like demo on the iPhone 3G in 2009!

Startups currently tackling the AR Cloud or parts of it:

YOUar (Portland)

Scape (London)

Escher Reality (Boston)

Aireal (Dallas)

Sturfee (Santa Clara)

Paracosm (Florida)

Fantasmo (Los Angeles)

Insider Navigation (Vienna, Austria)

InfinityAR (Israel)

Augmented Pixels (Ukraine/Silicon Valley)

Kudan (Bristol, UK)

DottyAR (Sydney, Australia)

Meta (Silicon Valley)

Daqri (Los Angeles)

Wikitude (Vienna, Austria)

6D.ai (San Francisco)

Postar.io (San Francisco)

<Your startup here!>

Many of these players will be at our AWE events, so you can see them in action.

What are the big players doing about it?

1) Apple

Apple CEO Tim Cook has been touting AR as “big and profound”, and Apple seems to have big ambitions for AR.

ARKit was a nice piece of low-hanging fruit for Apple. They had it running in-house (thanks to the Flyby acquisition) and had the in-house expertise to productize it (thanks to the Metaio acquisition). Apple reportedly decided to release ARKit just 6 months before its launch at WWDC this year.

A future iPhone equipped with a depth camera could turbocharge the creation of rich, accurate point clouds and, in doing so, define a new standard feature for smartphones. But we will still have to wait for that.

But besides 3D scanning of streets for its autonomous car project, the 2D mapping and positioning efforts for its maps, and the “thousands of engineers working on AR”, it doesn’t look like Apple is ready to take on the creation of a full-blown AR Cloud just yet. That might be due to privacy and security concerns around the data. Or perhaps they are hiding it well, as they tend to do.

2) Google

The AR Cloud is Google’s natural next step in “organizing the world’s information”. Google probably possesses more pieces of the puzzle to deliver on this mega project than anyone else, but I am not holding my breath.

Tango launched as a skunkworks project in 2013 with a vision that included 3 elements:

- Motion tracking (using visual features and the accelerometer/gyroscope); this was spun out as ARCore.

- Area learning: “Area Learning gives the device the ability to see and remember the key visual features of a physical space — the edges, corners, other unique features — so it can recognize that area again later,” storing environment data in a map that can be “re-used later, shared with other Tango devices, and enhanced with metadata such as notes, instructions, or points of interest.”

This sounds pretty close to the AR Cloud vision, but in reality Tango currently stores the environment data on the device and has no mechanism to share it with other Tango devices or users. The Tango APIs do not natively support sharing data in the cloud.

- Depth perception: detecting distances, sizes, and surfaces in the environment; this requires the Tango cameras.

At I/O this year, Google announced a new service, dubbed the Visual Positioning Service (VPS), that points to another piece of the AR Cloud; however, based on developers’ inquiries, it’s fair to classify it as “very early stage”.

Of course, Google has already built a pretty elaborate 3D map of the outdoors thanks to its Street View cars. Google may put all the AR Cloud pieces together within a few years. But Google’s APIs tend to be limited and may not be good enough for an optimal experience.

So Google is a natural top contender to own the AR Cloud, but unlikely to be the best or even the first.

3) Microsoft

Microsoft’s CEO has clearly communicated a revitalized focus on the cloud and on holographic computing. With the HoloLens, the most complete AR glasses system to date (though still bulky), Microsoft has delivered a fantastic capability to create point clouds that persist locally on the device. However, it can’t natively share them across multiple devices. Since Microsoft had no good hand to play in the current mobile AR battle, it skipped a generation straight to smartglasses and is leading the pack. But because smartglasses adoption is expected to be slow, its AR Cloud may not see much demand for a while.

4) Facebook, Snap, and perhaps Amazon, Alibaba, or Tencent may have that ambition as well, but are years behind (a great opportunity for some of the startups above to get acquired 😃).

5) Other contenders include Tesla, Uber, and other autonomous car and robotics companies that are already building 3D maps of the outdoor or indoor real world, though not necessarily with a special focus on AR.

An opportunity to build the infrastructure of the future

The AR Cloud represents the biggest opportunity in AR since Magic Leap to grow a Google-size startup. Speaking of Magic Leap, it used to have the ambition to build the AR Cloud. Does it still? Should we care?

In addition, none of the above (except possibly Facebook) has an incentive to build a cross-platform solution, which gives a startup another meaningful advantage in taking the lead in creating The AR Cloud.

This post wasn’t intended to define the requirements of an AR Cloud the way you’d find them in an RFQ, just to reflect how I envision it; we are looking forward to being surprised by how the ecosystem approaches it.

If you are passionate about building, using, or investing in The AR Cloud, talk to us; we should partner.

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

For follow up/future posts:

Once the AR Cloud is in place — what can you do with it?

Specialization in different parts and services of the AR Cloud

What will the App Store of the AR Cloud look like?

Is there a chance for an independent AR Cloud provider? Can we afford not to have an independent cloud provider?

We have one reality: who should manage and maintain the content associated with it? A global, regulated registry à la DNS?

References

Learning from 20 years of MMOs: anything by Raph Koster (see his website)

Information about point cloud hardware and software solutions

Background about The World in 3D from the Naval Postgraduate School.

Mixing Realities in Shared Space: An Augmented Reality Interface for Collaborative Computing (whitepaper by Mark Billinghurst)

What’s the ROI for a point cloud in construction?

First realtime SLAM using computer vision (1998): Andrew Davison, under David Murray

Why 3D Maps for AR will be worth more than Google Maps